The code base for this project can be found here and here

For the final project of my Bayesian Robotics class, the only requirement was to come up with a practical implementation of some of the concepts that were taught in the class. As such, my partner and I had an idea to implement real-time risk mapping and tracking for autonomous robots using only a depth sensor similar to the one found on the Microsoft Kinect. Both static and moving objects would be detected, with the static objects having a fixed region of high risk surrounding the object that the robot will want to avoid. For moving objects, the movement of the object itself is tracked and predicted. Once the velocity and direction of the object is found, we can predict where the moving object is likely to be in the near future. This likelihood region maps directly to the areas where we would like the robot to avoid. To achieve this, we used the Asus Xtion Pro sensor along with OpenNI and OpenCV for data acquisition and processing. Qt was chosen as the cross-platform framework for the front-end GUI.

Background

Copied from our report:

As the trend moves towards replacing humans with robots in dangerous environments, many of the behavioral decisions that are instinctively made by humans are often overlooked when implementing robotic AI. Examples of these behavioral decisions include risk analysis, object recognition, and pathing optimization. While these decisions are often made by the pilot in manually controlled robots, autonomous robots often lack these details in their decision making logic. Risk analysis in particular stands out as one of the more complex behavior to model due to its non-deterministic nature. Many factors must be taken into account when creating such complex models. These factors include the merging of data from various sensors of different complexity and dimensions, deriving the current operating state while taking its past history into account, and creating a complex map of possible options where the surroundings are changing.

Here we present a basic implementation of risk analysis where areas of high risk are mapped out through the use of IR and distance sensors. The risk regions for moving objects vary in shape and size depending on the object’s motion, and is derived using a motion model associated with the moving object. Our implementation uses the data from a cheap sensor that is widely available to draw a risk map of a room given the objects in its field-of-view. In addition, the motion path of moving objects are predicted using the same data set.

In an ideal scenario, the robot will have the capabilities to do both room and risk mapping as well as object tracking and motion prediction. In our scenario however, due to limited time, we only implement object tracking and motion prediction. While we are using only one sensor, data from multiple sensors of various types can be easily combined to create a full layout of a robot’s surrounding area. We assume that this capability to create a map of the surrounding area is preimplemented on all autonomous robots.

The Hardware

Originally we had planned on using the ubiquitous Microsoft Kinect for our project, but we ended up finding a few small issues with the device that made it significantly harder to use. The first major issue with the Kinect was its requirement for a 12V power source. As we wanted to power everything off of either the robotic platform or a laptop placed on the platform, the Kinect would have required more time that we didn’t have to add a step-up regulator and other circuitry. The second issue was the size and weight of the device; the first-generation Kinect is actually quite large at 12″ x 3″ x 3″ and heavier at almost 3lb. To get around these issues, we decided to instead go with the Asus Xtion Pro. Both the Xtion Pro and Kinect are comparable in terms of sensor specifications as they both use the same underlying sensor developed by PrimeSense. The Xtion Pro however, is much smaller at 7″ x 2″ x 2″ and only weighs 0.5lb. In addition to the smaller size and weight, the Xtion Pro is powered solely off a USB port, making integration into an existing system a trivial task.

The Kinect and Xtion Pro have nearly identical sensor specifications. The official specifications for the depth sensor are as follows:

- Distance of use: 0.8m to 3.5m

- Field of view: 58° H, 45° V, 70° D (Horizontal, Vertical, Diagonal)

- Depth image size: VGA (640×480) : 30fps, QVGA (320×240): 60fps

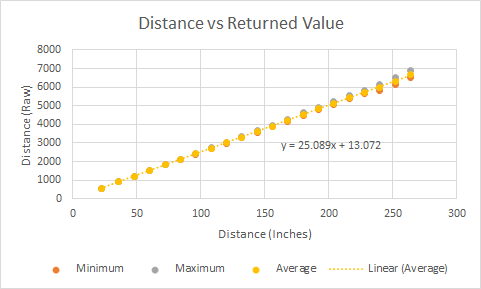

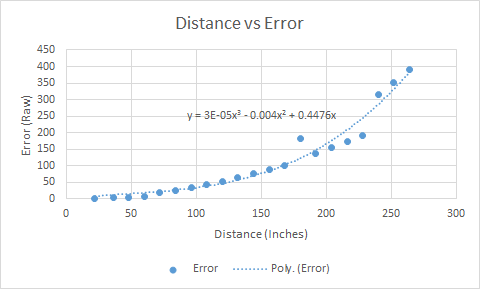

In our testing, we found that the actual usable distance ranged from 1.0m to 6.7m. The error however, increases exponentially with increased distance. Shown in the images on the right, we can see that the returned depth values from the sensor correspond linearly to the actual distance of the object. The measurement error however, increases significantly for points that are further away. This is shown to have an impact later on when motion detection is executed on the depth data.

One thing to note is that the sensor does also include a regular RGB camera in addition to the IR depth sensor. Since our aim is to do motion detection and tracking, we only used the depth sensor for our project as it is easier to track individual objects given depth data than with an RGB image. Tracking objects using only a RGB camera is much harder as objects with the same color will be recognized as a single entity. The data from the RGB camera could certainly be used to augment the data from the depth sensor, but we leave that as an exercise for anyone who wants to use our project as the basis for their own work.

Motion Tracking with Depth Data

Depth data is first acquired from the sensor using the APIs from OpenNI. The depth data is returned as a 16-bit single channel value for each pixel. Only ~13 bits of the depth data is actually useful due to the distance limitation from the sensor. These raw values are then converted to the native image format used by OpenCV (cv::Mat) and then passed to another thread for the actual processing. The application utilizes multiple threads for improved performance, with a thread being dedicated to the GUI, data acquisition, and data processing. An excerpt of the data acquisition code is shown below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 |

#include "OpenNI.h" using namespace xn; extern QSemaphore processorBuffer; void OpenNI::run() { XnStatus nRetVal = XN_STATUS_OK; Context context; ScriptNode scriptNode; EnumerationErrors errors; // Check if the config file exists const char *fn = NULL; if (fileExists(CONFIG_XML_PATH)) fn = CONFIG_XML_PATH; else if (fileExists(CONFIG_XML_PATH_LOCAL)) fn = CONFIG_XML_PATH_LOCAL; else { QString error = QString("Could not find " + QString(CONFIG_XML_PATH) + " nor " + QString(CONFIG_XML_PATH_LOCAL)); emit setStatusString(error); exit(XN_STATUS_ERROR); return; } // Load the config file nRetVal = context.InitFromXmlFile(fn, scriptNode, &errors); // Check to see if a sensor is connected if (nRetVal == XN_STATUS_NO_NODE_PRESENT) { XnChar strError[1024]; errors.ToString(strError, 1024); emit setStatusString(QString(strError)); exit(nRetVal); return; } else if (nRetVal != XN_STATUS_OK) { QString error = QString("Open failed: " + QString(xnGetStatusString(nRetVal))); emit setStatusString(error); exit(nRetVal); return; } // Connect to the attached sensor DepthGenerator depth; nRetVal = context.FindExistingNode(XN_NODE_TYPE_DEPTH, depth); CHECK_RC(nRetVal, "Find depth generator"); // Pull FPS and depth data XnFPSData xnFPS; nRetVal = xnFPSInit(&xnFPS, 180); CHECK_RC(nRetVal, "FPS Init"); DepthMetaData depthMD; short min = 9999, max = 0; // If this point is reached, notify that the sensor is ready emit sensorConnected(); while (1) { // Ensure that the processing queue isnt too long processorBuffer.acquire(1); // Wait for an updated frame from the sensor nRetVal = context.WaitOneUpdateAll(depth); if (nRetVal != XN_STATUS_OK) { QString error = QString("UpdateData failed: " + QString(xnGetStatusString(nRetVal))); emit setStatusString(error); break; } // Mark the frame (for determining FPS) xnFPSMarkFrame(&xnFPS); // Grab the depth data depth.GetMetaData(depthMD); // Grab the size and FOV of the sensor int X = depthMD.XRes(); int Y = depthMD.YRes(); XnFieldOfView fov; depth.GetFieldOfView(fov); emit setFOV(fov.fHFOV, fov.fVFOV); // Show the size/FOV/FPS/frame QString status = "Res: " + QString::number(X) + " x " + QString::number(Y); //status += " / FoV (rad): " + QString::number(fov.fHFOV) + " (H) " + QString::number(fov.fVFOV) + " (V)"; min = qMin(min, (short)depthMD(depthMD.XRes() / 2, depthMD.YRes() / 2)); max = qMax(max, (short)depthMD(depthMD.XRes() / 2, depthMD.YRes() / 2)); status += " / Center: " + QString::number(depthMD(depthMD.XRes() / 2, depthMD.YRes() / 2)); status += " / Min: " + QString::number(min) + " / Max: " + QString::number(max); emit setStatusString(status); emit setFPSString(QString::number(xnFPSCalc(&xnFPS))); emit setFrameString(QString::number(depthMD.FrameID())); // Allocate a new cv::Mat cv::Mat depthData = cv::Mat(Y_RES, X_RES, CV_16UC1); // Save the depth data (mirrored horizontally) ushort *p; for (int y = 0; y < Y; ++y) { p = depthData.ptr<ushort>(y); for (int x = 0; x < X; ++x) { short pixel = depthMD.Data()[(y * X) + (X - x - 1)]; p[x] = pixel; } } // Send the depth data for processing emit processDepthData(depthData); } depth.Release(); scriptNode.Release(); context.Release(); } |

In the depth processing thread, we run OpenCV’s background subtraction, morphological operations, and blob detection to track moving objects. For each new frame of data, we execute the following:

- Update the frame with the latest valid data (not all pixels are valid due to distance/IR shadow/etc).

- Execute background subtraction using OpenCV’s BackgroundSubtractor algorithm.

- Run morphological operations (opening following by closing) to remove noise and fill holes in the mask.

- Average the movement mask’s values over a few frames and erode the results by a bit to ignore object edges.

- Compress the resulting data into a horizontal plane and draw resulting image.

- Draw movement points and run OpenCV’s SimpleBlobDetector algorithm to find center of object.

- Filter object’s center through a Kalman Filter and calculate object’s velocity and direction from past values.

- Drawing resulting velocity and direction vector onto the image.

An image is generated at each step detailed above and is made available for display on the GUI. An excerpt of the data processing code is shown below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 295 296 297 298 299 300 301 |

#include "DepthProcessor.h" // Limit the processing buffer to X frames QSemaphore processorBuffer(3); /** * Here we process the raw data from the sensor */ void DepthProcessor::processDepthData(const cv::Mat &data) { // The 16-bit raw image is passed in via a pointer rawData16 = data; // Save a pixel as valid data if it is != 0 for (int y = 0; y < Y_RES; y++) { for (int x = 0; x < X_RES; x++) { if (rawData16.ptr<ushort>(y)[x] != 0) { lastValidData16.ptr<ushort>(y)[x] = rawData16.ptr<ushort>(y)[x]; } } } // Apply a 5-pixel wide median filter to the data for noise removal //cv::medianBlur(lastValidData16, lastValidData16, 5); // Execute a background subtraction to obtain moving objects pMOG->operator()(lastValidData16, fgMaskMOG, -1); fgMaskMOG.copyTo(fgMaskRaw); // Erode then dilate the mask to remove noise //cv::erode(fgMaskMOG, fgMaskMOG, cv::Mat()); //cv::dilate(fgMaskMOG, fgMaskMOG, cv::Mat()); // Alternative: int kernelSize = 9; cv::Mat kernel = cv::getStructuringElement(cv::MORPH_ELLIPSE, cv::Size(kernelSize, kernelSize)); // Morphological opening (remove small objects from foreground) cv::morphologyEx(fgMaskMOG, fgMaskMOG, cv::MORPH_OPEN, kernel); // Morphological closing (fill small holes in the foreground) cv::morphologyEx(fgMaskMOG, fgMaskMOG, cv::MORPH_CLOSE, kernel); // Average the moving mask's values and shrink it by a bit to remove edges cv::accumulateWeighted(fgMaskMOG, fgMaskTmp, 0.5); cv::convertScaleAbs(fgMaskTmp, fgMaskAverage); cv::erode(fgMaskAverage, fgMaskAverage, kernel); // Get the closest distance in the specified range that is not 0 and convert to inches for (int x = 0; x < X_RES; x++) { ushort min = 9999; ushort rawMin = 9999; for (int y = VFOV_MIN; y < VFOV_MAX; y++) { if (lastValidData16.ptr<ushort>(y)[x] != 0) min = qMin(min, lastValidData16.ptr<ushort>(y)[x]); rawMin = qMin(rawMin, rawData16.ptr<ushort>(y)[x]); } // Convert the raw distance values to distance in inches // Distance (inches) = (raw distance - 13.072) / 25.089; depthHorizon[x] = (min - 13.072) / 25.089; rawDepthHorizon[x] = (rawMin - 13.072) / 25.089; } // Mark the points of detected movements in the movement mask if the threshold is exceeded for (int x = 0; x < X_RES; x++) { int moved = 0; for (int y = VFOV_MIN; y < VFOV_MAX; y++) { if (fgMaskAverage.ptr<uchar>(y)[x] >= FG_MASK_THRESHOLD) moved = 1; } movementMaskHorizon[x] = moved; } // Draw all images drawDepthImages(); drawFOVImages(); // Update GUI with selected image updateImages(); processorBuffer.release(1); } /** * Generate a visualization of the depth data */ void DepthProcessor::drawDepthImages() { // Convert raw data to images to be displayed for (int y = 0; y < Y_RES; ++y) { for (int x = 0; x < X_RES; ++x) { // rawDepthImage rawDepthImage.setPixel(x, y, qRgb(rawData16.ptr<ushort>(y)[x] / (SCALE_DIVISOR / 2), rawData16.ptr<ushort>(y)[x] / SCALE_DIVISOR, rawData16.ptr<ushort>(y)[x] / (SCALE_DIVISOR * 2))); // lastValidDepthImage lastValidDepthImage.setPixel(x, y, qRgb(lastValidData16.ptr<ushort>(y)[x] / (SCALE_DIVISOR / 2), lastValidData16.ptr<ushort>(y)[x] / SCALE_DIVISOR, lastValidData16.ptr<ushort>(y)[x] / (SCALE_DIVISOR * 2))); // lastValidProcessed if (fgMaskMOG.ptr<uchar>(y)[x] == 0) { lastValidProcessed.setPixel(x, y, qRgba(lastValidData16.ptr<ushort>(y)[x] / (SCALE_DIVISOR / 2), lastValidData16.ptr<ushort>(y)[x] / SCALE_DIVISOR, lastValidData16.ptr<ushort>(y)[x] / (SCALE_DIVISOR * 2), 150)); } else { lastValidProcessed.setPixel(x, y, qRgb(lastValidData16.ptr<ushort>(y)[x] / (SCALE_DIVISOR / 2), lastValidData16.ptr<ushort>(y)[x] / SCALE_DIVISOR, lastValidData16.ptr<ushort>(y)[x] / (SCALE_DIVISOR * 2))); } // movementMaskImage movementMaskRawImage.setPixel(x, y, qRgb(fgMaskRaw.ptr<uchar>(y)[x], fgMaskRaw.ptr<uchar>(y)[x], fgMaskRaw.ptr<uchar>(y)[x])); // movementMaskAverageImage movementMaskAverageImage.setPixel(x, y, qRgb(fgMaskAverage.ptr<uchar>(y)[x], fgMaskAverage.ptr<uchar>(y)[x], fgMaskAverage.ptr<uchar>(y)[x])); } } // Draw lines indicating the FOV zones QPainter imagePainter; imagePainter.begin(&rawDepthImage); imagePainter.setPen(QPen(COLOR_DEPTH_FOV, 1)); imagePainter.drawLine(0, VFOV_MIN, X_RES, VFOV_MIN); imagePainter.drawLine(0, VFOV_MAX, X_RES, VFOV_MAX); imagePainter.end(); imagePainter.begin(&lastValidDepthImage); imagePainter.setPen(QPen(COLOR_DEPTH_FOV, 1)); imagePainter.drawLine(0, VFOV_MIN, X_RES, VFOV_MIN); imagePainter.drawLine(0, VFOV_MAX, X_RES, VFOV_MAX); imagePainter.end(); imagePainter.begin(&lastValidProcessed); imagePainter.setPen(QPen(COLOR_DEPTH_FOV, 1)); imagePainter.setBrush(QBrush(COLOR_DEPTH_FOV_FILL)); imagePainter.drawLine(0, VFOV_MIN, X_RES, VFOV_MIN); imagePainter.drawLine(0, VFOV_MAX, X_RES, VFOV_MAX); imagePainter.drawRect(0, 0, X_RES, VFOV_MIN); imagePainter.drawRect(0, VFOV_MAX, X_RES, Y_RES); imagePainter.end(); } /** * Draws the given vector of points onto an image (projected across an arc) */ void DepthProcessor::drawDistanceFOV(QImage &image, QVector<float> &data) { QPainter painter; painter.begin(&image); // Draw the FOV for the raw data painter.translate(X_RES / 2, Y_RES); // Rotate the canvas, draw all distances, then restore original coordinates painter.rotate(-90 - qRadiansToDegrees(fovWidth / 2)); for (int x = 0; x < X_RES; x++) { painter.rotate(qRadiansToDegrees(fovWidth / X_RES)); painter.setPen(QPen(COLOR_DEPTH_POINT, 2, Qt::SolidLine, Qt::RoundCap, Qt::RoundJoin)); painter.drawPoint(data[x], 0); painter.setPen(QPen(COLOR_DEPTH_BACKGROUND, 2, Qt::SolidLine, Qt::RoundCap, Qt::RoundJoin)); painter.drawLine(QPoint(data[x], 0), QPoint(400, 0)); } painter.end(); } /** * Draws the sensor's FOV onto the image */ void DepthProcessor::drawSensorFOV(QImage &image) { QPainter painter; painter.begin(&image); // Draw the sensor's FOV painter.translate(X_RES / 2, Y_RES); painter.setPen(QPen(COLOR_FOV, 2, Qt::DashLine)); painter.rotate(-90 - qRadiansToDegrees(fovWidth / 2)); painter.drawLine(0, 0, X_RES, 0); painter.rotate(qRadiansToDegrees(fovWidth)); painter.drawLine(0, 0, X_RES, 0); painter.end(); } /** * Generate a horizontal visualization of the depth data */ void DepthProcessor::drawFOVImages() { // Draw the raw FOV rawHorizonImage.fill(Qt::white); drawDistanceFOV(rawHorizonImage, rawDepthHorizon); drawSensorFOV(rawHorizonImage); // Draw the last valid data FOV lastValidHorizonImage.fill(Qt::white); drawDistanceFOV(lastValidHorizonImage, depthHorizon); drawSensorFOV(lastValidHorizonImage); // Draw only the movement points along with results of blob detection movementPointsImage.fill(Qt::white); drawMovementZones(movementPointsImage, depthHorizon); convertQImageToMat3C(movementPointsImage, movementPointsMat); blobDetector->detect(movementPointsMat, blobKeypoints); std::vector<cv::Point2f> points; for (int i = 0; i < blobKeypoints.size(); i++) { points.push_back(cv::Point2f(blobKeypoints[i].pt.x, blobKeypoints[i].pt.y)); } movementObjects = movementTracker.update(points); drawKeyPoints(movementPointsImage, blobKeypoints); drawMovingObjects(movementPointsImage, movementObjects); drawSensorFOV(movementPointsImage); // Draw the overlay of movements onto static objects overlayHorizonImage.fill(Qt::white); drawDistanceFOV(overlayHorizonImage, depthHorizon); drawMovementZones(overlayHorizonImage, depthHorizon); drawKeyPoints(overlayHorizonImage, blobKeypoints); drawMovingObjects(overlayHorizonImage, movementObjects); drawSensorFOV(overlayHorizonImage); } /** * Draws the zones of detected movement on the map */ void DepthProcessor::drawMovementZones(QImage &image, QVector<float> &data) { QPainter painter; painter.begin(&image); // Draw the FOV for the raw data painter.translate(X_RES / 2, Y_RES); // Rotate the canvas, draw all distances, then restore original coordinates painter.rotate(-90 - qRadiansToDegrees(fovWidth / 2)); painter.setPen(QPen(COLOR_MOVEMENT_ZONE, 20, Qt::SolidLine, Qt::RoundCap, Qt::RoundJoin)); for (int x = 0; x < X_RES; x++) { painter.rotate(qRadiansToDegrees(fovWidth / X_RES)); if (movementMaskHorizon[x] == 1) painter.drawPoint(data[x], 0); } painter.end(); } /** * Draws the set of keypoints on the image */ void DepthProcessor::drawKeyPoints(QImage &image, std::vector<cv::KeyPoint> &points) { QPainter painter; painter.begin(&image); painter.setPen(QPen(COLOR_KEYPOINT, 6, Qt::SolidLine, Qt::RoundCap, Qt::RoundJoin)); for (int i = 0; i < points.size(); i++) { painter.drawPoint(points[i].pt.x, points[i].pt.y); } painter.end(); } /** * Draws the moving objects along with its ID, velocity, and angle of predicted movement */ void DepthProcessor::drawMovingObjects(QImage &image, std::vector<MOVING_OBJECT> &objects) { QPainter painter; painter.begin(&image); for (int i = 0; i < objects.size(); i++) { QPoint initPoint = QPoint(objects[i].predicted_pt.x, objects[i].predicted_pt.y); // Calculate the line to draw to indicate object movement velocity and angle float velocity_x = initPoint.x() + (objects[i].velocity * cos(objects[i].angle)) * VELOCITY_MULTIPLIER; float velocity_y = initPoint.y() + (objects[i].velocity * sin(objects[i].angle)) * VELOCITY_MULTIPLIER; QPointF predPoint = QPointF(velocity_x, velocity_y); // Draw the object's estimated position painter.setPen(QPen(COLOR_EST_POSITION, 6, Qt::SolidLine, Qt::RoundCap, Qt::RoundJoin)); painter.drawPoint(initPoint); // Draw the object's ID painter.drawText(initPoint.x() + 3, initPoint.y() - 3, QString::number(objects[i].ID)); // Draw the line indicating object's movement velocity and angle painter.setPen(QPen(COLOR_EST_POSITION, 2, Qt::SolidLine, Qt::RoundCap, Qt::RoundJoin)); painter.drawLine(initPoint, predPoint); // Draw the object's running average painter.setPen(QPen(COLOR_EST_AVGERAGE, 6, Qt::SolidLine, Qt::RoundCap, Qt::RoundJoin)); painter.drawPoint(objects[i].historyAvg.x, objects[i].historyAvg.y); } painter.end(); } void DepthProcessor::convertMatToQImage3C(cv::Mat &mat, QImage &image) { uchar *ptr; for (int y = 0; y < Y_RES; y++) { ptr = mat.ptr<uchar>(y); for (int x = 0; x < X_RES; x++) { image.setPixel(x, y, qRgb(ptr[x], ptr[x], ptr[x])); } } } void DepthProcessor::convertQImageToMat3C(QImage &image, cv::Mat &mat) { for (int y = 0; y < Y_RES; y++) { for (int x = 0; x < X_RES; x++) { cv::Vec3b &pixel = mat.at<cv::Vec3b>(y, x); QColor pixColor(image.pixel(x, y)); pixel.val[0] = pixColor.blue(); pixel.val[1] = pixColor.green(); pixel.val[2] = pixColor.red(); } } } |

The results of this implementation is shown in the video at the end this post. Moving points are marked with a large red circle, and blob detection is executed on the red areas to detect individual moving objects. The center of the blob is shown in yellow, while the green dot shows the average over the last ten frames. The blue dot shows the predicted position of the object from the Kalman Filter along with a vector showing the estimated velocity and direction of the object. Furthermore, I wrote some code to assign a unique persistent ID to each moving objects which is displayed alongside of each object.

Object Position and Movement Prediction

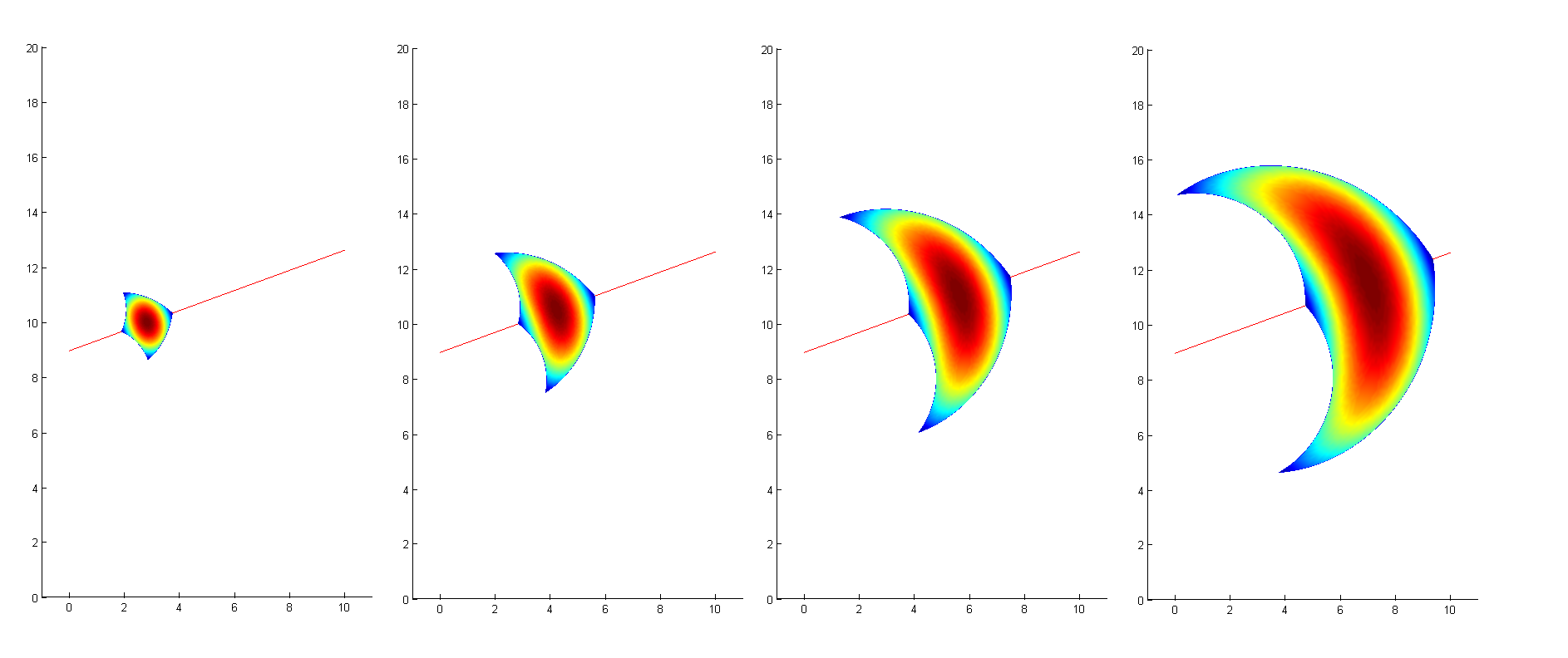

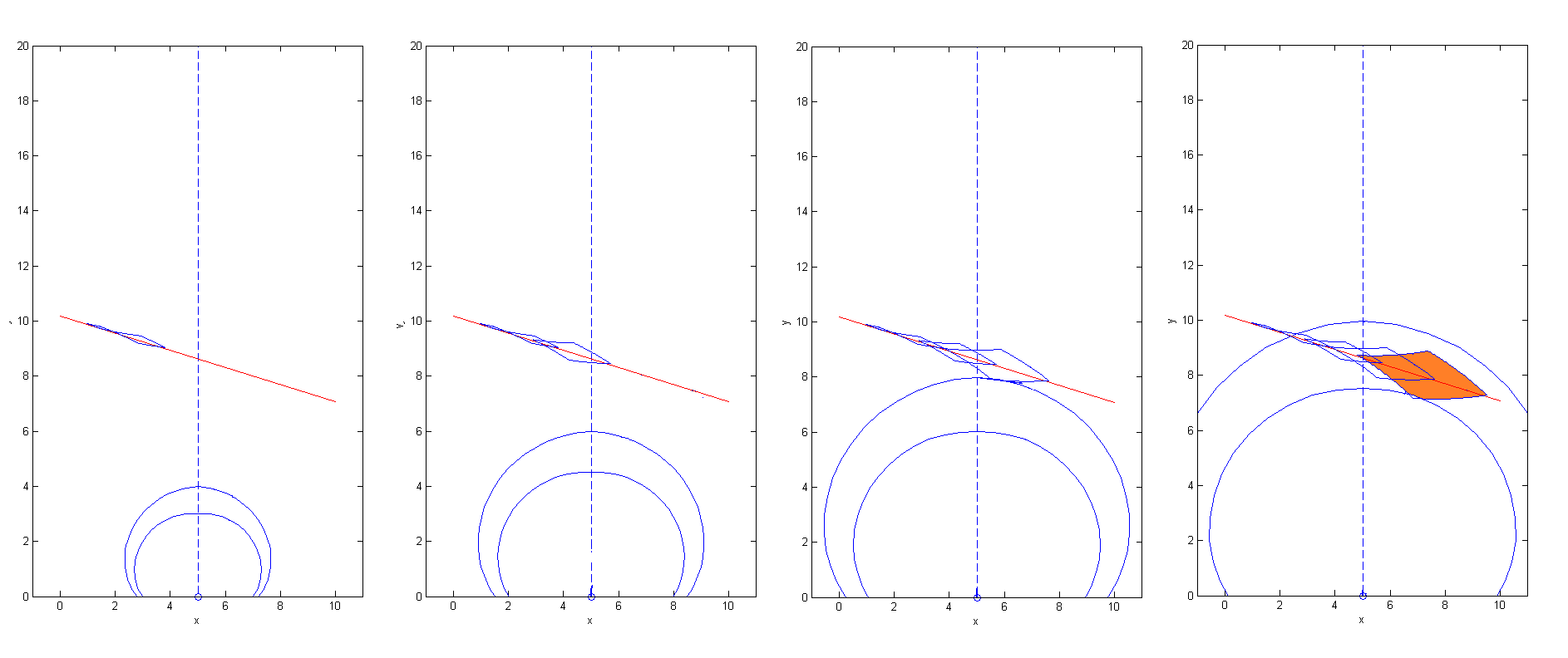

My partner worked on this particular area, so I won’t delve too much into the details here. As we now have the capability to track and predict an object’s movement, we can use this information to calculate the forward reachable set (FRS) of the object, or in layman terms, the probability of where the object will be in the near future.

The FRS for any moving object is going to look something like the above due to Newton’s first law of motion (the concept of momentum). If an object is moving in a given direction at a decent velocity, the likelihood of it suddenly stopping or changing directions prior is very low. This likelihood decreases the faster the object is moving, and increases with longer periods in between measurement updates. The image above shows the likelihood of where an object is predicted to be at a given velocity and an increasing period of time in between updates. Areas where there is a high probability for the object (and thus higher risk) are shown in red, while blue areas indicate low positional likelihood for the object. The blue areas should actually extend quite a bit more, but we have it cropped a bit for easier visualization. The chart shows the different between fast sampling rates on the left and slow rates on the right. A fast sampling rate results in a much smaller likelihood region for the object as the predicted region is corrected more much frequently. As the sampling rate decreases, the object’s positional uncertainty increases.

This prediction has a number of possible uses. As mentioned earlier on, this generated likelihood region directly corresponds to the risk region of a moving object. By calculating the risk region for the robot itself along with moving objects in its field of view, we can combine the risk regions and update the robot’s pathing if any intersections are detected. As shown in the above image, fast moving objects will cause regions that the robot will want to path around to avoid collisions. For our project, we didn’t get around to generating an actual movement path for the robot but pathing can easily be implemented using the A* search algorithm and recalculated with each updated frame.

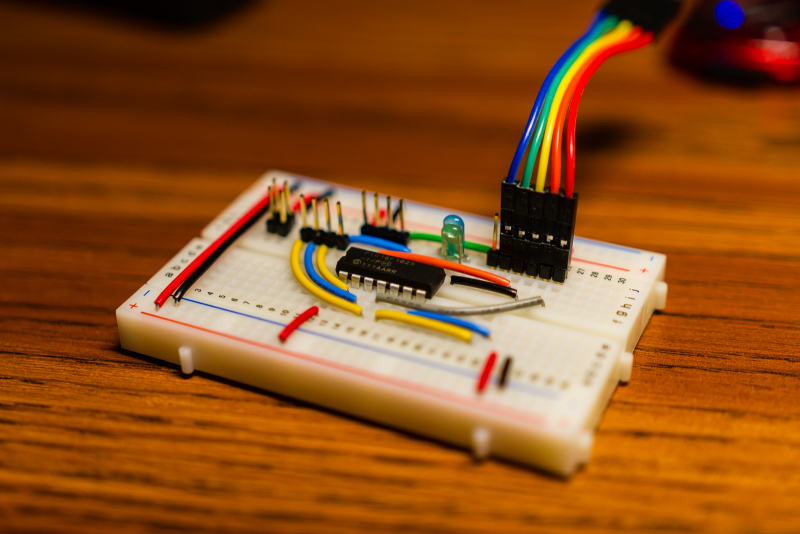

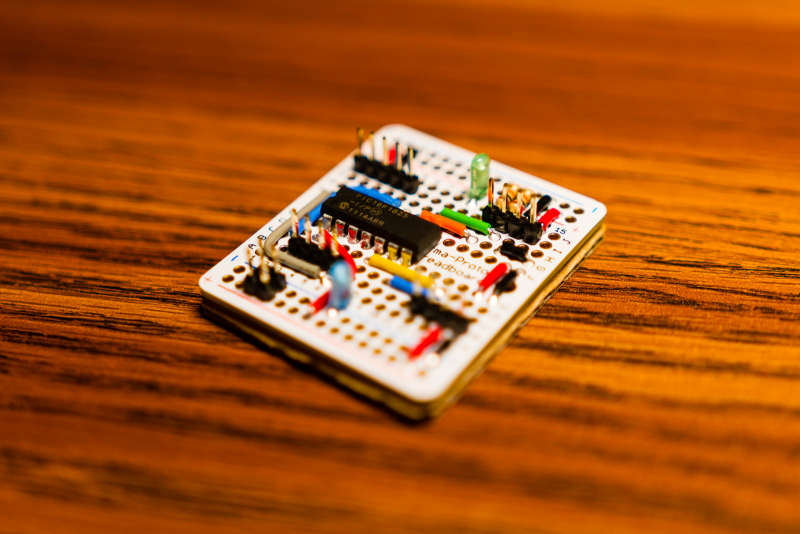

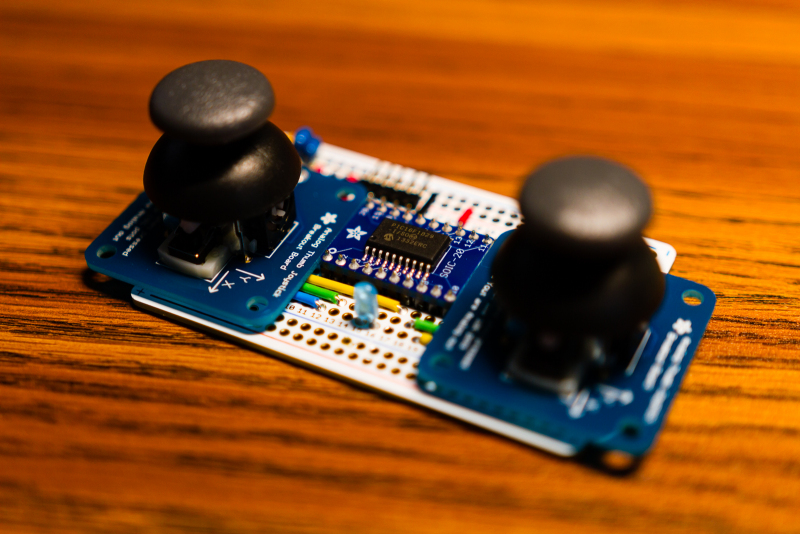

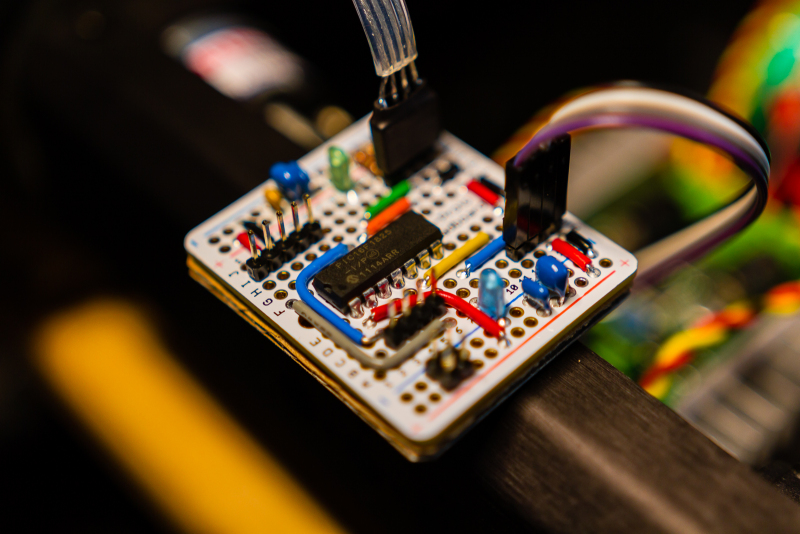

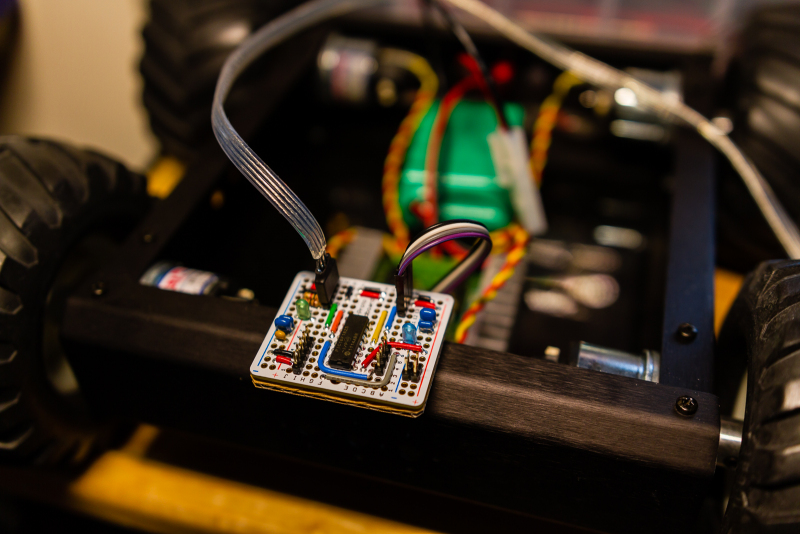

Robotic Platform

While we didn’t get around to implementing autonomous controls, I did put together a basic platform with remote control capabilities. The platform itself was comprised of a A4WD1 base from Lynxmotion along with a Sabertooth 2×12 regenerative motor controller. The controller board for the platform was prototyped using a PIC16F1825 and the optional handheld controller uses a PIC16F1829 along with two analog thumb joysticks from Adafruit. The robot controller board polls the handheld controller through I2C and receives commands from a computer using a RN-42 Bluetooth module. The protocols for controlling the robot has been implemented, but the actual autonomous control code was left unfinished due to a lack of time and looming deadlines from other obligations.

Conclusion

As seen from the video below, this implementation works pretty well for tracking moving objects using only the depth sensor data. If I had more time to work on this project, I would’ve liked to add the following features:

- Plotting the predicted object’s position onto the generated map

- Implement SLAM using OpenCV’s SURF implementation

- Increase object detection accuracy using the RGB video feed alongside of the depth data

- Incorporate real-time path generation between two points with risk area avoidance

- Use a lightweight computer (Raspberry Pi) to wirelessly transfer sensor data to a remote computer

- Optimize code to process data at the full 640×480 resolution